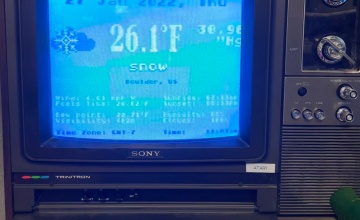

The Importance of Getting the Weather on Your Atari 800, or, Slow Networks Experiment 7

Happily, libi striegl and I are slowly getting back to the Slow Networks experiments we began in the Media Archaeology Lab in 2020 as a way to, well, stay sane during the pandemic and have a little fun. To inaugurate our return, today's experiment involves learning how to use our FujiNet network adapter. We first […]